Description

Remove duplicate rows.

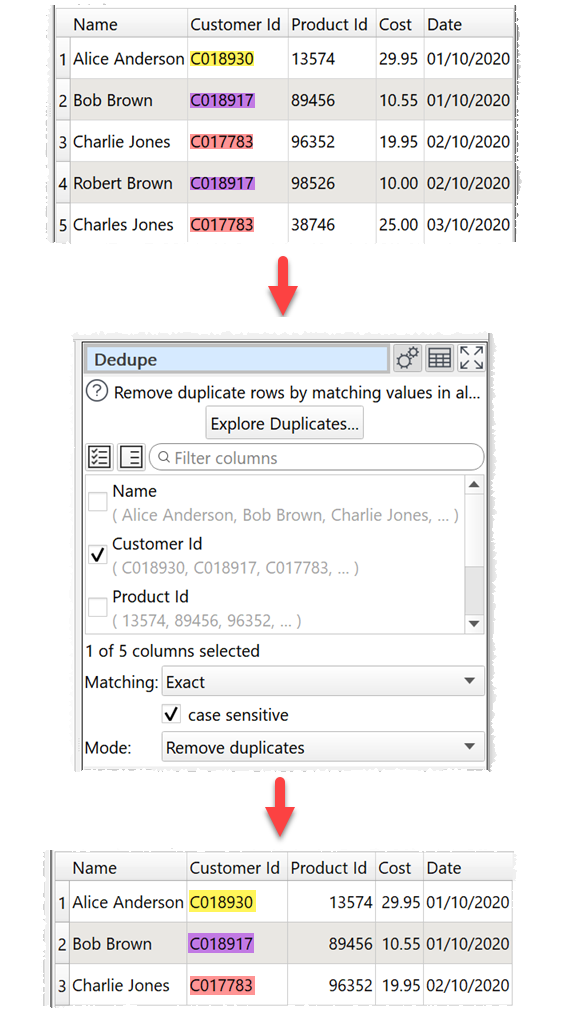

Example

Keep the first record for each unique 'Customer Id':
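The example above comes down to keeping the first row for each distinct key value. The following is a minimal Python sketch of that idea. It assumes the data is a list of dictionaries keyed by column name. The function name `keep_first` and the sample rows are hypothetical, for illustration only.

```python
def keep_first(rows, key_columns):
    """Keep the first row for each distinct combination of key_columns values."""
    seen = set()
    result = []
    for row in rows:
        # The key is built from every checked column, in order.
        key = tuple(row[c] for c in key_columns)
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


# Hypothetical sample data: C1 appears twice, so only its first row survives.
customers = [
    {"Customer Id": "C1", "Name": "Ann"},
    {"Customer Id": "C2", "Name": "Bob"},
    {"Customer Id": "C1", "Name": "Ann B."},
]
deduped = keep_first(customers, ["Customer Id"])
```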

Inputs

One.

Options

• Click Explore Duplicates... to explore which rows are duplicates.

• Check the column(s) in which you want to look for duplicate values.

• Set Matching to Exact to treat only identical values as duplicates, or to Fuzzy to also match values that differ slightly. Fuzzy matching is much slower.

• Set Closeness to how similar two values must be to count as a fuzzy match. For example, set it to 80% to treat two values that are at least 80% the same as a match.

• Check case sensitive to treat upper- and lower-case letters as different when matching.

• Check ignore whitespace to ignore whitespace characters when matching.

• Check ignore punctuation to ignore punctuation characters when matching.

• Set Mode according to what you want to do with duplicates:

◦ Remove duplicates removes all duplicate rows.

◦ Keep only duplicates keeps only the duplicate rows (the inverse of Remove duplicates).

◦ Add duplicate information adds extra columns at the end with information about duplicate rows. This reorders the rows, but does not remove any. The additional columns are:

▪ Group: Each row and its duplicates are given a unique group number, starting at 1.

▪ Row: The number of the row in the input dataset.

▪ Closeness: How closely the duplicate matches, as a percentage (100% = exact match).

▪ Duplicate: Set to true if the row is a duplicate and false if not.

▪ Duplicates: The number of duplicates that a non-duplicate row has.

• Check drilldown to allow double-clicking a row in the data table to drill down to that row and its duplicates in the upstream data. This is only available when Matching is set to Exact and Mode is set to Remove duplicates.
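The options above can be combined in a rough Python sketch. It is not the transform's actual algorithm, and several details are assumptions:

* Fuzzy similarity uses `difflib.SequenceMatcher.ratio()` as a stand-in metric.
* Each row is compared with the first (topmost) row of an existing group.
* A row matches only when every checked column reaches the threshold.
* Closeness is a fraction from 0 to 1, so 80% is written as 0.8.
* The option defaults, 1-based Row numbers, and an empty Duplicates value on duplicate rows are guesses.

The names `dedupe`, `normalize`, `similarity` and their parameters are hypothetical.

```python
import difflib
import string


def normalize(value, case_sensitive, ignore_whitespace, ignore_punctuation):
    """Apply the case / whitespace / punctuation options to one cell value."""
    text = str(value)
    if not case_sensitive:
        text = text.lower()
    if ignore_whitespace:
        text = "".join(ch for ch in text if not ch.isspace())
    if ignore_punctuation:
        text = "".join(ch for ch in text if ch not in string.punctuation)
    return text


def similarity(a, b, matching):
    """1.0 or 0.0 for Exact; difflib's ratio (an assumed stand-in) for Fuzzy."""
    if matching == "Exact":
        return 1.0 if a == b else 0.0
    return difflib.SequenceMatcher(None, a, b).ratio()


def dedupe(rows, columns, mode="Remove duplicates", matching="Exact",
           closeness=0.8, case_sensitive=False, ignore_whitespace=False,
           ignore_punctuation=False):
    threshold = 1.0 if matching == "Exact" else closeness
    keys = [tuple(normalize(row[c], case_sensitive, ignore_whitespace,
                            ignore_punctuation) for c in columns)
            for row in rows]

    # Each group is a list of (row index, score). The first entry is the
    # non-duplicate, i.e. the topmost row of the group.
    groups = []
    for i, key in enumerate(keys):
        for group in groups:
            first_key = keys[group[0][0]]
            # Assumed: the weakest checked column decides the match.
            score = min((similarity(a, b, matching)
                         for a, b in zip(key, first_key)), default=1.0)
            if score >= threshold:
                group.append((i, score))
                break
        else:
            groups.append([(i, 1.0)])

    if mode == "Remove duplicates":
        return [rows[group[0][0]] for group in groups]
    if mode == "Keep only duplicates":
        dup_indices = sorted(i for group in groups for i, _ in group[1:])
        return [rows[i] for i in dup_indices]
    if mode == "Add duplicate information":
        result = []  # rows come out grouped together, i.e. reordered
        for number, group in enumerate(groups, start=1):
            for position, (i, score) in enumerate(group):
                info = dict(rows[i])
                info["Group"] = number
                info["Row"] = i + 1  # assumed 1-based
                info["Closeness"] = round(score * 100)
                info["Duplicate"] = position > 0
                # Assumed: left empty (None) on rows that are duplicates.
                info["Duplicates"] = len(group) - 1 if position == 0 else None
                result.append(info)
        return result
    raise ValueError(f"unknown mode: {mode!r}")


people = [{"Name": "John Smith"}, {"Name": "Mary Jones"}, {"Name": "Jon Smith"}]
fuzzy_kept = dedupe(people, ["Name"], matching="Fuzzy", closeness=0.8)
```

Here "Jon Smith" falls into the same group as "John Smith", because their difflib ratio is about 95%. "Mary Jones" starts a group of its own.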

Notes

• Rows are considered duplicates only if they match in all the checked columns.

• Comparisons are whitespace sensitive unless ignore whitespace is checked. You can use Whitespace to remove whitespace before deduping, and Replace to get rid of other unwanted characters (e.g. whitespace inside the text).

• When several rows are duplicates of each other, only the top one is retained. You may therefore want to Sort your dataset first to control which row is kept.

• The Unique transform is a more powerful (but more complex and slower) alternative to Dedupe.
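The notes above can be combined into a short Python sketch. It does three things:

1. It trims stray whitespace, roughly what a Whitespace step might do.
2. It sorts so that the row you want to keep is on top.
3. It keeps the top row for each key.

The function `keep_top_rows`, its parameters, and the sample orders are hypothetical. The sketch assumes dates are ISO strings, so they sort correctly as text.

```python
def keep_top_rows(rows, key_columns, sort_key=None, descending=False):
    # Trim leading/trailing whitespace so "C1 " and "C1" compare equal.
    cleaned = [{k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
               for row in rows]
    # Sort first so the row you want to keep ends up on top of its group.
    if sort_key is not None:
        cleaned = sorted(cleaned, key=lambda r: r[sort_key], reverse=descending)
    seen = set()
    kept = []
    for row in cleaned:
        # Rows are duplicates only if all the checked columns match.
        key = tuple(row[c] for c in key_columns)
        if key not in seen:
            seen.add(key)
            kept.append(row)
    return kept


orders = [
    {"Customer Id": "C1", "Date": "2023-01-05"},
    {"Customer Id": "C2", "Date": "2023-02-10"},
    {"Customer Id": "C1 ", "Date": "2023-03-20"},  # note the trailing space
]
# Newest first, so the most recent order per customer is the one retained.
latest = keep_top_rows(orders, ["Customer Id"], sort_key="Date", descending=True)
```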